Researchers aren’t horsing around with program to detect equine facial keypoints

As technology advances to make life more comfortable for humans, the need for developments in animal welfare also grows. While a computer that automatically recognizes expressions on an animal’s face may seem far-fetched, a third-year computer science Ph.D. student at UC Davis has completed the first step toward creating such a program.

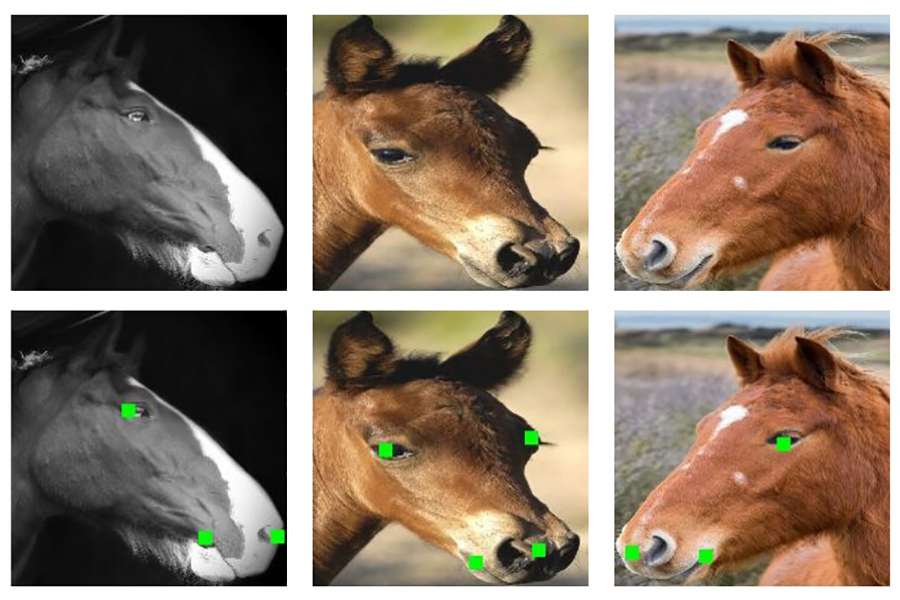

Maheen Rashid, along with her advisor Yong Jae Lee and intern Xiuye Gu, worked on a computer program that can identify “keypoints” on a horse’s face, a step toward her goal of a program that can detect equine expressions of pain. Keypoints mark the parts of an image that matter to the program.

“[Keypoints] identify points that have some kind of word or meaning attached to them, like the eyes or the nose or the mouth corners,” Rashid said. “They could also be points that don’t necessarily have any semantic meaning attached to them. For example, for humans, there can be 68 keypoints on a human face, and every one of those keypoints doesn’t have a name attached to it, but those keypoints can still be very important for telling if the eyes are open or shut or what type of smile the person has.”
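To make the idea concrete, here is a minimal Python sketch of how a few keypoints around an eye could indicate whether it is open or shut. The openness ratio, the function name and the pixel coordinates are all illustrative assumptions; the article does not describe how Rashid’s program uses its keypoints.

```python
import math

def eye_openness(outer_corner, inner_corner, upper_lid, lower_lid):
    """Ratio of the eyelid gap to the eye width; values near 0 suggest a shut eye.

    Each argument is an (x, y) keypoint in pixels. This ratio is an
    illustrative measure assumed for this sketch, not the researchers' method.
    """
    width = math.dist(outer_corner, inner_corner)
    if width == 0:
        raise ValueError("eye corners must be distinct")
    return math.dist(upper_lid, lower_lid) / width

# Hypothetical keypoints for the same eye, once open and once nearly shut.
open_eye = eye_openness((10, 50), (40, 50), (25, 42), (25, 57))
shut_eye = eye_openness((10, 50), (40, 50), (25, 49), (25, 51))
```

None of the four points needs a name of its own for the ratio to be useful, which is the point Rashid makes about keypoints without semantic meaning.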

Claudia Sonder, the director of equine outreach at the Center for Equine Health, oversees projects involving the roughly 200 horses housed there, which are used for research because of their extensive medical records. She stated that minute changes in facial muscles are involuntary and are key to noticing a problem before other, more obvious signs appear. Even horse owners and experienced veterinarians may miss signs that a computer could catch.

“Facial recognition of pain is something that involves looking at the horses remotely to document the position of their ears, their eyelids, their nostrils and their mouth,” Sonder said. “In order to do that in any kind of high volume, you need a computer involved because a computer can measure those parameters more accurately and more quickly than the human eye. The initial goal of this team was to train the computer to recognize a horse’s face. Computers have, for quite some time, recognized a child’s face, for example, and a computer can detect subtle changes in facial expression in children that are not commonly detectable by the human eye.”

Some keypoints on a face, whether it belongs to a horse, a human or another animal, include the eyes, mouth corners and nostrils. However, a program must be “trained” to detect these points automatically, which means “showing” it many examples.

“If you’ve never seen any horses before in your life, and I want you to be able to identify what horses are, I can show you pictures of horses and say, ‘This is a picture of a horse,’” Rashid said. “Hopefully, after seeing a bunch of pictures of horses, you could identify a horse that you’ve never seen before. Training a program to perform a specific task ends up roughly meaning something similar to that, where you try to give the program a series of positive examples of something, and try to get it to extrapolate from those positive examples. In my case, it was being able to detect keypoints and the positive examples were images of animals with keypoints marked on them already.”

Since too few images of horses had these keypoints marked, Rashid and her associates first trained the program on human faces, which offered the large amount of data the program needed to learn to detect keypoints.

“When it is trying to adapt to horse faces, it’s a difficult job to do because horse faces look so different than human faces,” Rashid said. “We figured that we can make the job easier for the network by moving horse faces closer, in appearance, to the type of data that it’s used to seeing, which is human faces. When we ‘warp’ horse faces, we’re basically trying to make horse faces look more human-like, and we’re using these ‘warped’ faces to train the network and make it adapt to horse faces.”
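The article does not say which kind of warp the team used. As a rough illustration only, the Python sketch below assumes the simplest possible version: a rotation, uniform scaling and shift that moves a horse’s two eye keypoints onto the eye positions of a human face template, carrying the other keypoints along. The function name, keypoint names and coordinates are hypothetical.

```python
def similarity_from_two_points(src_a, src_b, dst_a, dst_b):
    """Build a warp that sends src_a to dst_a and src_b to dst_b.

    The warp is a rotation, uniform scale and translation, written as
    z' = a * z + b over complex numbers. This is an assumed stand-in for
    whatever warp the researchers actually used.
    """
    s1, s2 = complex(*src_a), complex(*src_b)
    d1, d2 = complex(*dst_a), complex(*dst_b)
    if s1 == s2:
        raise ValueError("source points must be distinct")
    a = (d2 - d1) / (s2 - s1)  # rotation and scale
    b = d1 - a * s1            # translation

    def warp(point):
        z = a * complex(*point) + b
        return (z.real, z.imag)

    return warp

# Hypothetical pixel coordinates for a horse face and a human template.
horse_keypoints = {
    "left_eye": (40.0, 60.0),
    "right_eye": (120.0, 60.0),
    "nose": (80.0, 220.0),
}
human_template = {"left_eye": (30.0, 40.0), "right_eye": (70.0, 40.0)}

warp = similarity_from_two_points(
    horse_keypoints["left_eye"], horse_keypoints["right_eye"],
    human_template["left_eye"], human_template["right_eye"],
)
warped = {name: warp(point) for name, point in horse_keypoints.items()}
```

A transform this simple only lines up the eyes. Making a long horse face look human-like, as Rashid describes, would call for a more flexible warp.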

Many researchers are involved in the project, including some from UC Berkeley and UC San Diego as well as some from Sweden and Denmark. Pia Haubro Andersen, a professor of large animal surgery at the Swedish University of Agricultural Sciences, was inspired by a paper she read in 2014. Sonder said she was contacted in 2015, while Rashid was brought onto the project in 2016.

“Ten years ago, our research changed from looking at pain as a physiological response to pain as a behavioral response,” Andersen said. “At the same time, people started looking at the facial expressions in laboratory animals, and it was actually the nurses that saw a mouse with small eyes, its ears back, its little whiskers pointing and [could tell that] it was in pain.”

One of Andersen’s Ph.D. students at the time said that horses must have had this “pained face” as well and described small changes in the horse’s appearance. Though this may seem trivial, horses are known to be stoic animals who attempt to hide their pain from humans.

“What does a horse think of you, as an observer?” Andersen said. “Is it afraid of you, is it scared of you, or are you in good relations with the horse? If I’m the owner observing the horse, the horse trusts me. When you move the horse to a hospital, a lot of strange people are there and a lot of strange things are going on. For example, as a veterinarian, you look at the horse exactly where it hurts, and it scares the horse because it’s a prey animal. In a hospital, the horse believes that it is surrounded by hostiles.”

Sonder stated that even around their owners or other familiar humans, horses still tend to withhold pain responses. Some uncontrolled tells include a horse’s ear position, the shape of its eyes and eyelids, whether its nostrils are dilated and the contour of its face.

“[Horses] are animals that evolved over millions of years to get away from predators, but also be strong,” Sonder said. “Many of us are not looking closely enough at them to detect these subtle changes. For example, a horse may walk up, and it has its ears forward. It may even take a treat from you, yet it’s experiencing pain. The horse will act cheerful and meet our thresholds for a normal exam. But if you look closely, the involuntary muscles in their face are telling us that there’s something wrong.”

This can be compared to the tendency of some people to hide their pain and the way others can still detect subtle shifts in their expression. Veterinarians, however, must rely on these small changes during examinations, since humans cannot directly communicate with animals.

“If human observers are going to underestimate the pain that animals are in, then it means that animals are going to be in pain for longer until they’re diagnosed,” Rashid said. “It also means that there could be diseases that get really bad before they are diagnosed, so you end up spending a lot of money in order to cure an animal, and an animal may suffer for longer because you just didn’t know the animal was in pain.”

The next piece of this puzzle is getting Rashid’s keypoint detection program to notice these microexpressions.

“I think keypoint detection is a first in a series of projects, so I’m working on expression recognition now,” Rashid said. “Getting expression recognition to work on static images versus videos are two very different problems, so there’s more that I’m going to have to work on to get this to be actually usable.”

Both Andersen and Sonder have expressed their excitement about using an expression recognition program to improve care for all animals. The implications of this project extend beyond animal welfare and touch upon aspects of academic and non-academic life.

“When we started with this, the automated recognition was just a tool for me,” Andersen said. “I wanted this tool because I wanted better pain management, and I wanted earlier diagnoses. And I still want that. But after [being] entered into this interdisciplinary collaboration with cognitive scientists and computer vision people, I think there are so many more applications that you could work on with animals. Sometimes, I say to my students, ‘Maybe computer vision is the closest we get to ‘talk’ to the animal, to understand what is it that animals ‘want’ or ‘don’t want’.’ We simply hadn’t been able to contain all the data and information, but suddenly we have computers to analyze them for us.”

Though this is only the beginning of this expression recognition project, there are already noticeable changes for those involved and high hopes for the future.

“It’s changed how I practice veterinary medicine,” Sonder said. “I feel that when I approach a patient, I have a much greater ability to detect pain, and it’s changed how I’ve made some of my decisions. But one thing that’s really important is that all of this research has to be validated, meaning, at some point, we’re going to have to prove that the equine pained face is relatively consistent for certain types of pain. That’s going to take time.”

Written by: Jack Carrillo Concordia — science@theaggie.org